Protecting Student Data in the Age of AI

A Federal Framework for Transparency, Accountability, and Auditability

DOI:

https://doi.org/10.60690/4a78va88

Keywords:

Data Privacy, Data Practices, AI Training Data, Data Sovereignty, Ownership, Ethics, Capital, Human Rights, Marx, Stanford University, Risk Management, Autonomy

Abstract

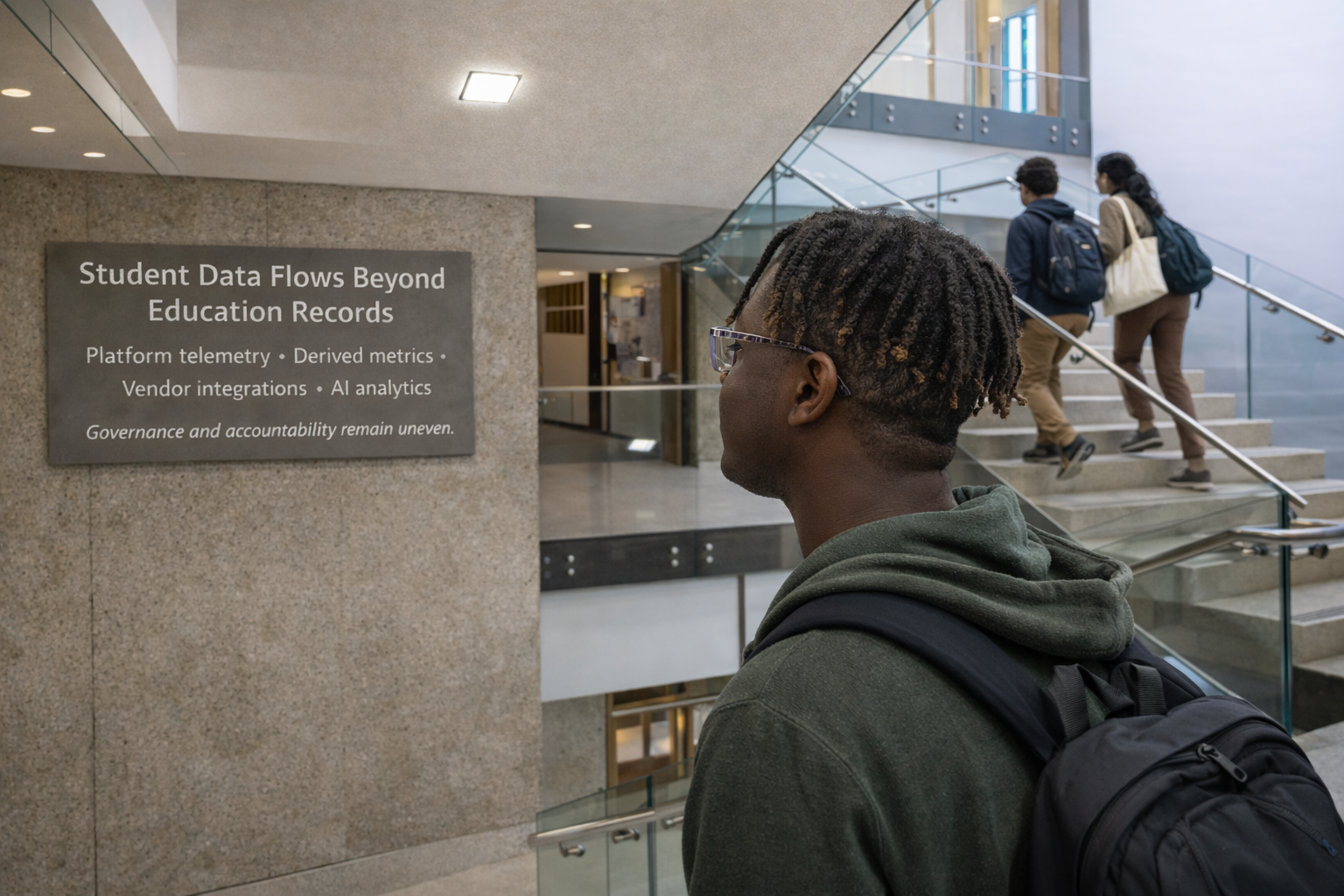

Universities increasingly rely on learning platforms, analytics tools, and AI-enabled services that generate student activity telemetry and derived inferences such as engagement metrics and risk flags. Much of this platform-derived and AI-related data is not consistently treated as part of the formal "education record" that federal privacy law (FERPA) protects. As a result, it can fall into a regulatory gray zone that weakens transparency, limits on secondary use, retention controls, and accountability as the data move through vendors, APIs, and integrated tools. This memo proposes a federal governance framework that shifts from record-based compliance to lifecycle oversight of student-data pipelines. The framework includes standardized definitions, consolidated disclosures, enforceable purpose limits (covering de-identified and derived data), mandatory logging and retention rules, and independent audits of high-risk vendor and institutional systems. It builds on a CS182W ethical critique of consent- and anonymization-based data-sale proposals, translating autonomy and intellectual-freedom concerns into auditable, implementable requirements.
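To make "auditable, implementable requirements" concrete, the following is a minimal sketch of how three of the proposed controls might combine in code: purpose limits that carry over to derived and de-identified data, retention expiry, and a log entry for every access request. It is illustrative only and is not taken from the memo. Every name here (`StudentData`, `derive`, `AuditLog`, `request_access`, the purpose strings, and the record kinds) is hypothetical, and a real system would need durable, tamper-evident logging rather than an in-memory list.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class StudentData:
    """Hypothetical record in a student-data pipeline."""
    record_id: str
    kind: str  # e.g. "telemetry", "derived", "de-identified"
    allowed_purposes: frozenset[str]
    expires_at: datetime  # retention deadline
    source_id: str | None = None  # lineage link for derived data


def derive(parent: StudentData, record_id: str, kind: str) -> StudentData:
    # Derived or de-identified data inherit the parent's purpose limits
    # and may not outlive the parent's retention deadline.
    return StudentData(
        record_id=record_id,
        kind=kind,
        allowed_purposes=parent.allowed_purposes,
        expires_at=parent.expires_at,
        source_id=parent.record_id,
    )


@dataclass
class AuditLog:
    """In-memory stand-in for an append-only access log."""
    entries: list[dict] = field(default_factory=list)

    def record(self, **entry) -> None:
        self.entries.append(entry)


def request_access(data: StudentData, actor: str, purpose: str,
                   now: datetime, log: AuditLog) -> bool:
    # Check retention first, then purpose; log every decision, allowed or not.
    if now >= data.expires_at:
        allowed, reason = False, "retention_expired"
    elif purpose not in data.allowed_purposes:
        allowed, reason = False, "purpose_not_permitted"
    else:
        allowed, reason = True, "ok"
    log.record(time=now.isoformat(), actor=actor, record_id=data.record_id,
               kind=data.kind, purpose=purpose, allowed=allowed, reason=reason)
    return allowed


# Usage: a vendor's risk flag derived from click telemetry.
now = datetime(2025, 1, 15, tzinfo=timezone.utc)
log = AuditLog()
clicks = StudentData("t-001", "telemetry", frozenset({"course_delivery"}),
                     expires_at=now + timedelta(days=365))
risk_flag = derive(clicks, "d-001", "derived")

print(request_access(risk_flag, "vendor-analytics", "course_delivery", now, log))  # True
print(request_access(risk_flag, "vendor-analytics", "advertising", now, log))      # False
print(request_access(clicks, "instructor", "course_delivery",
                     now + timedelta(days=400), log))                             # False
```

The design choice worth noting is that `derive` copies the parent's limits instead of starting from a blank slate, so a derived risk flag cannot escape the purpose restriction that applied to the raw telemetry. Each denial also leaves a log entry with a reason, which gives an auditor something to inspect.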